Typically, the L-BFGS history depth (LBFGS_HISTORY_DEPTH) is between 3 and 10. It should be neither too small, since a shallow history gives a coarser Hessian approximation, nor too large, since the computation becomes too slow.
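A rough cost model shows why a deep history slows the solver down. As a back-of-the-envelope sketch, assume $n$ model parameters and history depth $m$ (both symbols are introduced here), and assume the standard two-loop recursion for computing each search direction (Oracle's internal implementation may differ). L-BFGS then stores $m$ pairs of $n$-vectors:

$$
\text{memory} \approx 2mn \text{ floats}, \qquad \text{work per search direction} \approx 4mn \text{ multiplications},
$$

compared with $n^2$ floats for a dense Hessian. For example, with $n = 10^6$ and $m = 10$, the history holds $2 \times 10^7$ floats (about 160 MB in double precision), whereas a dense Hessian would need $10^{12}$ floats (about 8 TB). Both L-BFGS costs grow linearly in $m$, which is why a very large history depth makes each iteration slower.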

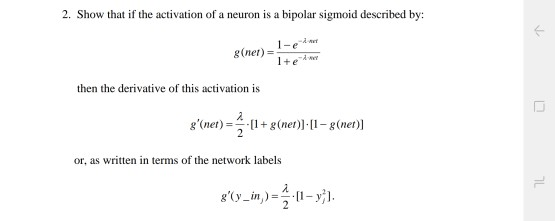

In this post, we will go over the implementation of activation functions in Python. An activation function is used to introduce non-linearity in an artificial neural network: it determines whether a neuron fires, as illustrated in the diagram. Activation functions are part of the neural network itself, and the non-linearity they introduce allows us to model a class label or score that varies non-linearly with the independent variables. The code uses NumPy and Matplotlib, imported as `import numpy as np` and `import matplotlib.pyplot as plt`.

Training such a network requires an optimization solver, which searches for the optimal solution of the loss (cost) function, that is, its extreme value (maximum or minimum). Oracle Data Mining implements the Limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) method together with line search: L-BFGS is used to find the descent direction, and line search is used to find an appropriate step size. The method approximates the Hessian matrix from gradient evaluations through a sequence of low-rank updates (rank two for BFGS), so it needs only a limited amount of memory.

The number of historical copies kept by the L-BFGS solver is defined by LBFGS_HISTORY_DEPTH. When the number of iterations is smaller than the history depth, every update is retained and the Hessian computed by L-BFGS is accurate; when the number of iterations is larger than the history depth, the oldest updates are discarded and the Hessian is an approximation.
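To make the solver description concrete, here is a minimal NumPy sketch of L-BFGS with a backtracking line search. It assumes the textbook two-loop recursion and an Armijo sufficient-decrease test; it is not Oracle Data Mining's implementation, and the names `lbfgs`, `two_loop_direction`, `backtracking`, and the parameter `history_depth` (standing in for LBFGS_HISTORY_DEPTH) are hypothetical. The bounded `deque` drops the oldest (s, y) pair once the history is full, which is the point where older curvature information is lost.

```python
import numpy as np
from collections import deque


def two_loop_direction(grad, history):
    """Return -H @ grad, where H is the L-BFGS inverse-Hessian approximation
    built only from the stored (s, y, rho) pairs (hypothetical helper)."""
    q = grad.copy()
    alphas = []
    # First loop: newest pair to oldest.
    for s, y, rho in reversed(history):
        alpha = rho * s.dot(q)
        q -= alpha * y
        alphas.append(alpha)
    # Initial inverse-Hessian scaling gamma = s'y / y'y from the newest pair.
    gamma = 1.0
    if history:
        s, y, _ = history[-1]
        gamma = s.dot(y) / y.dot(y)
    r = gamma * q
    # Second loop: oldest pair to newest (alphas reversed to match).
    for (s, y, rho), alpha in zip(history, reversed(alphas)):
        beta = rho * y.dot(r)
        r += s * (alpha - beta)
    return -r


def backtracking(f, x, fx, grad, d, step=1.0, shrink=0.5, c1=1e-4):
    """Armijo backtracking: shrink the step until f decreases sufficiently."""
    slope = grad.dot(d)
    while f(x + step * d) > fx + c1 * step * slope:
        step *= shrink
        if step < 1e-12:
            break
    return step


def lbfgs(f, grad_f, x0, history_depth=5, tol=1e-8, max_iter=500):
    """Minimize f; returns (x, iterations used). history_depth is hypothetical."""
    x = np.asarray(x0, dtype=float)
    g = grad_f(x)
    history = deque(maxlen=history_depth)  # oldest pair dropped automatically
    for it in range(max_iter):
        if np.linalg.norm(g) < tol:
            return x, it
        d = two_loop_direction(g, history)   # L-BFGS: descent direction
        t = backtracking(f, x, f(x), g, d)   # line search: step size
        x_new = x + t * d
        g_new = grad_f(x_new)
        s, y = x_new - x, g_new - g
        sy = s.dot(y)
        # Keep the pair only if the curvature condition s'y > 0 holds.
        if sy > 1e-10 * np.linalg.norm(s) * np.linalg.norm(y):
            history.append((s, y, 1.0 / sy))
        x, g = x_new, g_new
    return x, max_iter


def rosen(x):
    """Rosenbrock test function, minimum at (1, 1)."""
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosen_grad(x):
    return np.array([-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
                     200.0 * (x[1] - x[0] ** 2)])


for m in (1, 3, 10):
    x_opt, iters = lbfgs(rosen, rosen_grad, [-1.2, 1.0], history_depth=m)
    print(f"history_depth={m}: x={np.round(x_opt, 6)}, iterations={iters}")
```

Running it with different `history_depth` values gives a quick feel for the depth trade-off; the iteration counts depend on the starting point, the tolerance, and the line-search constants chosen here.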

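Back to the activation functions: a minimal NumPy sketch of a few common ones follows. The post does not say which functions it covers, so this selection (binary step, sigmoid, tanh, ReLU, leaky ReLU) and the leaky-ReLU slope `alpha=0.01` are assumptions. The binary step maps most directly onto the idea of a neuron that either fires or does not; the others are smooth or piecewise-linear alternatives commonly used in practice.

```python
import numpy as np


def step(x):
    """Binary step: the neuron 'fires' (1.0) when its input is >= 0, else 0.0."""
    return np.where(x >= 0, 1.0, 0.0)


def sigmoid(x):
    """Logistic sigmoid, squashing inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def tanh(x):
    """Hyperbolic tangent, squashing inputs into (-1, 1)."""
    return np.tanh(x)


def relu(x):
    """Rectified linear unit: max(0, x) element-wise."""
    return np.maximum(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for x > 0, alpha * x otherwise (alpha is an assumed default)."""
    return np.where(x > 0, x, alpha * x)


x = np.linspace(-3.0, 3.0, 7)  # -3, -2, -1, 0, 1, 2, 3
for f in (step, sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, np.round(f(x), 3))
```

To plot the curves with Matplotlib, evaluate each function on a denser grid such as `np.linspace(-5, 5, 200)` and pass the result to `plt.plot`.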